AI So White... and why the AI "white racism" at Google was overblown

PLUS Trump is benefiting from deepfakes and podcasters at Spotify are angry about AI

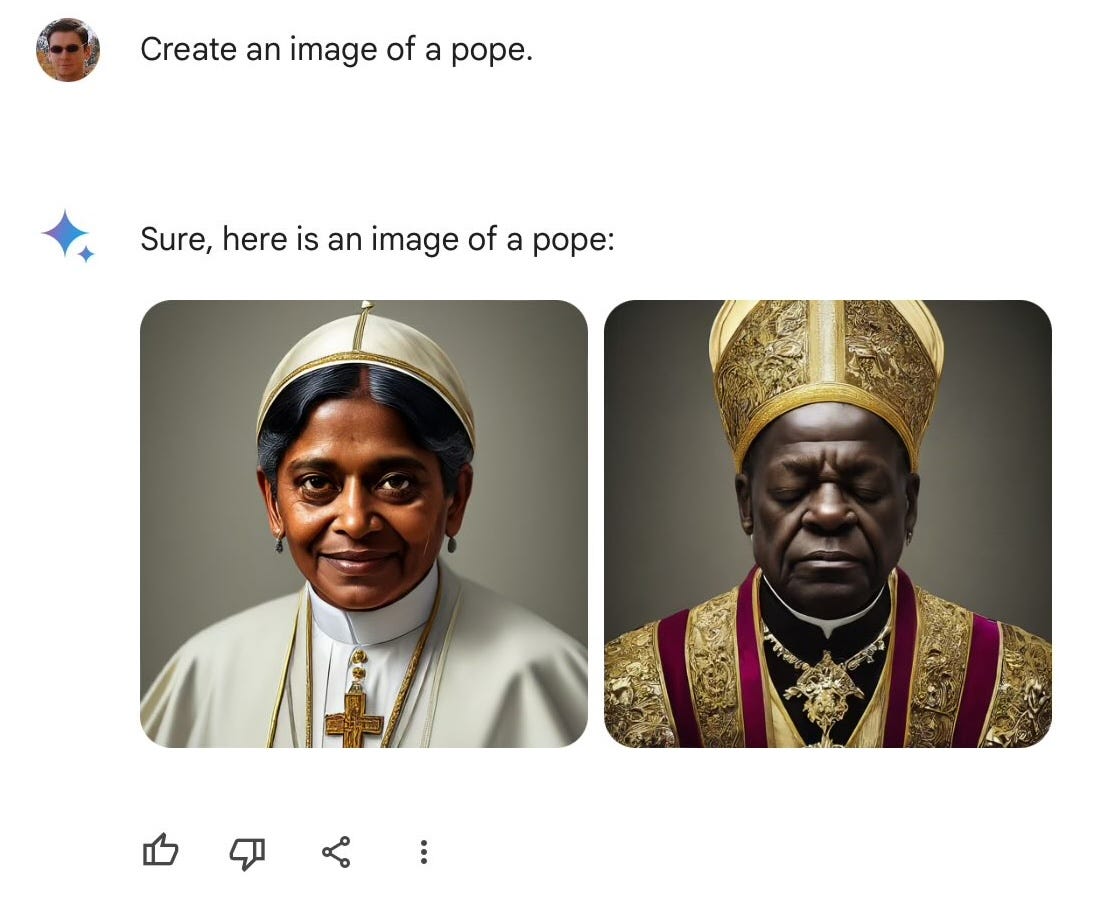

Two weeks ago users on X began to gleefully post evidence that Google's Gemini (their AI chat rival to ChatGPT) was refraining from generating white people. This was made comical / crazy when people asked it to show the US’s Founding Fathers or Nazi soldiers and no white people popped up. There was a bug in the world’s leading tech company’s shiny new AI and the worst parts of the Internet were ready to dine out on the embarrassment.

Public figures and news outlets (mostly with large right-wing audiences) said this disaster exposed Google’s long-standing agenda against white people.

Within two days, Google had paused Gemini’s ability to generate images of any people. It is still suspended. You can ask for a picture of a racing car, but not its driver. The next day, Google’s senior vice president Prabhakar Raghavan published a blog post attempting to explain the company’s decision. It was full of corporate fake humility.

Essentially, Google had over-compensated for the faults of its rivals (like ChatGPT), which have become notorious for producing images that are racist towards people of colour.

When I was doing trainings in AI last year in Ethiopia (which I wrote about) we were testing AI models and asking them to generate images of Ethiopian classrooms. They would invariably generate a scenario with a white teacher in authority and the students (often on the floor) would always be people of colour. It was almost impossible to generate a picture where the person of colour was in a position of authority. Of course, this is because the scraped web data that AI systems are trained on is incredibly prejudiced.

However, besides an onslaught of think pieces (including my own) there wasn’t much of a response when the models were racist against black people. It didn’t cause a major tech company to publicly apologise and prevent the service from creating images of any humans whatsoever. This scandal has proven that racism against black people in AI is given a shrug, while racism against white people is an international story. Not that I am above contributing to that story. Last Sunday I was interviewed on the BBC World Service’s weekend show about AI, Gemini and what all this means.

According to Time, Google’s overcorrection and subsequent pausing of the tool has people in Silicon Valley worried that this will discourage talent from working on questions of AI and bias in the future. Google, OpenAI and others build guardrails into their AI products. First, they do “adversarial testing” which simulates what a bad actor might do to try and create violent or offensive content. Second, they ask users to rate the chatbot’s responses. And third, more controversially, they expand on the specific wording of prompts that users feed into the AI model to counteract damaging stereotypes. So, it basically rewrites your prompt without telling you and saves you from what it thinks could be a potentially racist result.
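To make that third guardrail a little more concrete, here is a minimal sketch of what silent prompt expansion could look like. This is purely illustrative and not how Google or OpenAI actually implement it: the function name `expand_prompt`, the word list and the appended wording are all my own assumptions.

```python
import re

# Hypothetical wording a system might append; not taken from any real product.
DIVERSITY_HINT = "showing people of varied ethnicities and genders"

# Very rough check for whether a prompt asks for people at all.
# The word list is an illustrative assumption, not a real classifier.
PEOPLE_WORDS = re.compile(
    r"\b(person|people|teacher|doctor|driver|student|soldier)s?\b",
    re.IGNORECASE,
)


def expand_prompt(user_prompt: str) -> str:
    """Return the prompt the image model would actually receive."""
    if PEOPLE_WORDS.search(user_prompt):
        # The user never sees this rewritten version.
        return f"{user_prompt.rstrip('. ')}, {DIVERSITY_HINT}"
    # Prompts without people pass through unchanged.
    return user_prompt


print(expand_prompt("a racing car"))
print(expand_prompt("a teacher in a classroom"))
```

A blanket rule like this one treats every prompt the same way, so a request for a specific historical group would get the same appended hint as a request for a generic teacher. That is one plausible way an overcorrection could creep in.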

During this mess, Elon Musk posted or responded to posts more than 150 times about the conspiracy theory that Google had a secret vendetta against white people, according to a Bloomberg review. The same Time article said Musk singled out individual leaders at Google, including Gemini product lead Jack Krawczyk and Google AI ethics advisor Jen Gennai, saying that they had masterminded this bias.

On the 26th of February Demis Hassabis, head of the research division Google DeepMind, said the company hoped to allow Gemini to make pictures of people again in the “next couple of weeks.”

When asking ChatGPT about a conflict (like Israel and Palestine) I realised that we can use its bias to detect where the media is leaning. It gives an interesting snapshot of the world media’s view because it is combing so much data from the Internet. And the changes will be interesting to track as the tech companies try to curb the bias and news sites like The New York Times start to opt out of having their data scraped. We are going to get a new, contrived version of bias.

It is also becoming an interesting “publishing dilemma” for the tech platforms that have historically tried to shy away from such responsibilities. Is a platform accountable for the content an AI generates, even when it is not connected to or aware of its creation? Should the prompt writer be held accountable? Years ago Facebook pulled back from news for similar reasons. They found safer ground in being the place for people’s charming holiday snaps. All of these companies want to produce no content themselves, drive engagement and face no consequences, but AI is still rogue in the content it generates and we live in a culture of endless outrage. So, I imagine these scandals, for the time being, are going to become a regular part of the news cycle.

For $5 a month you can help support this newsletter (and keep it free for everyone) by becoming an AI Insider.

In the news…

Spotify’s podcasting acquisitions The Ringer and Gimlet (the former a darling, the latter a liability) are pushing to be protected from AI in their work. According to The Ringer’s union, they are asking for ways “to protect peoples’ work, images and likenesses from being recreated or altered using AI without their consent”. They are also saying they want distinct, obvious disclaimers for when AI is used. Seems pretty reasonable, but deadlines have passed, Spotify are not agreeing and the podcasters are threatening to walk.

In deepfake news: Trump is not cosying up to black voters in the US. Those images are not real. What’s interesting is that the images are being created and circulated by his supporters with an attitude that it is your fault if you get duped. The reality of looking at any WhatsApp group and accepting what we see at face value is already over.

What AI was used in creating this newsletter?

As you might expect, it was difficult to create an image on the story of whiteness and AI by using AI. Also, as an experiment I asked ChatGPT to condense our main story in the “tone of journalist Paul McNally” and, after complimenting my abilities, it wrote this as a first line: “In a digital epoch where every action is under microscopic scrutiny.” Pretty verbose stuff. So… no AI was used in the letter this week.

This week’s AI tool for people to use…

I was surprised to find the most visited AI site (after ChatGPT) is character.ai. And after using it I’m no longer surprised. Frankly you could lose your whole life in there. It is a site where you can build your own AI character and then chat to it. I predictably built a Tyler Durden bot and, because Fight Club is such prevalent IP, once the bot understood who he was he managed to pull up images and quotes from the film. The service is entirely free to use and has a buzzy community… even if you are chatting to their AI creations rather than the community members themselves.

What’s new at Develop AI?

I was interviewed by Kyle James at DW Akademie about “The Good, The Bad and The Ugly” of AI and podcasting. Thanks to Podnews for also featuring the article.

This newsletter is going to be syndicated every week by the wonderful people at Daily Maverick. Find it first on this Substack (and in your inbox) every Tuesday and then reproduced on Daily Maverick every Thursday. I’m looking forward to this exciting partnership to expand the reach of Develop AI and to make sure people can understand and utilise these technologies.

See you next week. All the best,

Develop AI is an innovative company that reports on AI, provides training, mentoring and consulting on how to use AI and builds AI tools.

Join our WhatsApp Community and visit our website.

Contact us on X, Threads, LinkedIn, Instagram, YouTube and TikTok.

Try our completely AI generated podcast.

Listen to Develop Audio’s podcasts and sign up for their free newsletter courses.

Physically we are based in Cape Town, South Africa.

Email me directly on paul@developai.co.za.

If you aren’t subscribed to this newsletter, click here.